By: Anton Abaya

Securing AI systems can feel overwhelming. The technology is moving fast, the risks are evolving even faster, and the guidance can be abstract or fragmented. Yet for many organizations, the path forward doesn’t require starting from scratch.

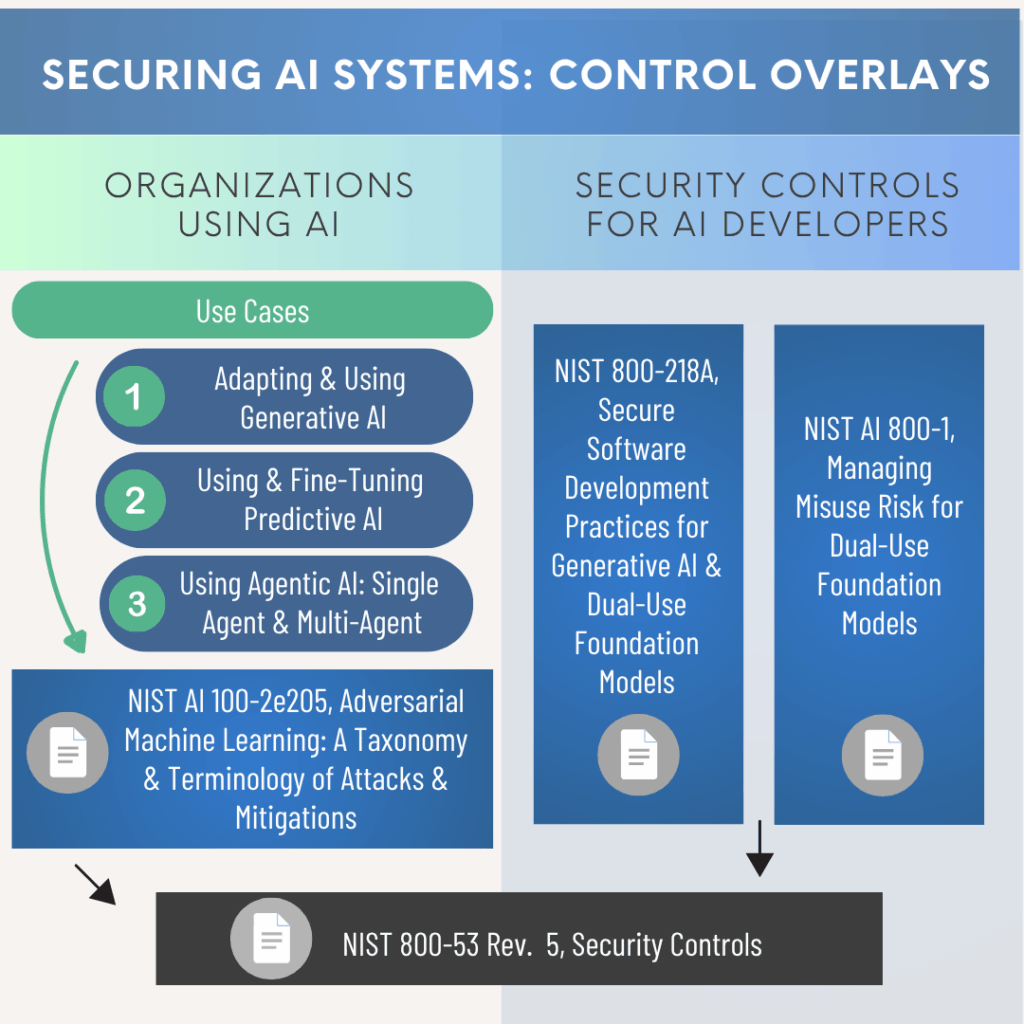

NIST’s new AI Cybersecurity Control Overlays, also referred to as the NIST Control Overlays for Securing AI Systems, are designed to meet security and risk teams where they already are: using established cybersecurity guidelines — especially NIST Special Publication (SP) 800-53 controls and the NIST Risk Management Framework (RMF) — and extending them to address AI-specific threats in a concrete, testable way.

This article recaps the NIST Control Overlays for Securing AI Systems (COSAiS) concept paper and the recent NIST COSAiS project webinar, and explains how the forthcoming overlays can help organizations secure AI systems using the tools and processes they already know.

Why Comprehensive & Structured Strategies for Mitigating AI Security Threats Are Necessary

As Pellera Technologies’ Global CISO Sean Colicchio cautions, “There’s a blind trust that AI will solve the problems AI introduced… but the same people building the attack platforms are just as experienced as those building the defenses — it’s going to be a very quick arms race.”

The good news is that modern AI systems are still software. However, they introduce security challenges that look very different from those of traditional software applications. This is because:

- AI spans the full lifecycle. Training data, model weights, configuration settings, prompts, and post-deployment monitoring all introduce unique security challenges. Is the AI we deployed yesterday still operating under the rules and requirements originally intended, or has it drifted?

- New attack classes are emerging. Prompt injection, data and model poisoning, model extraction, multi-agent misbehavior, and backdoor attacks are increasingly part of the AI threat landscape. As we delegate tasks to AI and trust it to act on our behalf, what safeguards apply if it malfunctions, and what is the impact on the business or on human life?

- Existing guidance can be too high-level. Organizations may understand they need “trustworthy” or “responsible” AI, but still lack a concrete, control-level view of what to implement and how to assess it.

- AI is rapidly evolving and maturing. The AI arms race between nations, and even between major corporations, is real. Board-mandated integration of AI into every business process or system is now widespread.

At the same time, many enterprises already have mature security and privacy programs built around:

- The NIST SP 800-53 Rev. 5 controls

- Sectoral and regulatory obligations referencing these controls

- Established assessment processes using NIST SP 800-53A

COSAiS is intended to bridge the gap by turning AI-specific risk insights into SP 800-53-based overlays that are actionable, assessable, and aligned with existing enterprise risk management practices.


What Is a Control Overlay (& Why Use It for AI)?

NIST defines a control overlay as an additional layer of customization applied to the SP 800-53 control baselines. Overlays can:

- Add, modify, or remove controls from a baseline.

- Clarify how controls apply to specific technologies, environments, missions, or sectors.

- Set parameter values for controls and control enhancements.

- Provide shared, community-vetted starting points for implementation.

Overlays are not new — NIST SP 800-53B includes guidance and examples — but COSAiS applies this approach specifically to AI systems. For AI, overlays offer several advantages, including the ability to:

- Leverage what’s already in place. Many organizations already implement SP 800-53 controls; overlays help them extend that same infrastructure to AI systems rather than creating a parallel framework.

- Focus on AI-specific risks. The controls needed to protect an AI model, its training data, and connected infrastructure are similar to those for other software, but the attack surface and misuse scenarios differ.

- Support consistent assessment. Because overlays build on SP 800-53 and SP 800-53A, they lend themselves to repeatable audits, evaluations, and assurance activities for AI.

- Promote interoperability and trust. A standardized overlay set for common AI use cases helps organizations communicate expectations across business units, partners, and regulators.

Anyone — enterprises, industry sectors, communities of interest, consortia — can use overlays to tailor controls to their AI use cases.
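The overlay operations described in this section (adding or removing controls, setting parameter values, and clarifying how a control applies) can be pictured as a small transformation over a baseline. The following is a minimal sketch, not a NIST-defined format: the `Control` and `Overlay` classes, the `apply_overlay` function, and the example parameter values and guidance text are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class Control:
    """One SP 800-53 control as it appears in a baseline."""
    control_id: str
    title: str
    parameters: dict = field(default_factory=dict)  # organization-defined values
    guidance: str = ""                              # technology-specific notes


@dataclass
class Overlay:
    """Hypothetical container for the kinds of change an overlay can make."""
    add: list = field(default_factory=list)         # Control objects to add
    remove: set = field(default_factory=set)        # control IDs to drop
    parameters: dict = field(default_factory=dict)  # control ID -> parameter values
    guidance: dict = field(default_factory=dict)    # control ID -> applicability notes


def apply_overlay(baseline: dict, overlay: Overlay) -> dict:
    """Return a new tailored control set; the baseline itself is not modified."""
    tailored = {cid: c for cid, c in baseline.items() if cid not in overlay.remove}
    for control in overlay.add:
        tailored[control.control_id] = control
    for cid, values in overlay.parameters.items():
        if cid in tailored:
            merged = {**tailored[cid].parameters, **values}
            tailored[cid] = replace(tailored[cid], parameters=merged)
    for cid, text in overlay.guidance.items():
        if cid in tailored:
            tailored[cid] = replace(tailored[cid], guidance=text)
    return tailored


# Illustrative baseline and overlay; the choices below are assumptions, not NIST guidance.
baseline = {
    "AC-6": Control("AC-6", "Least Privilege"),
    "SI-4": Control("SI-4", "System Monitoring"),
    "PE-3": Control("PE-3", "Physical Access Control"),
}
overlay = Overlay(
    add=[Control("SI-10", "Information Input Validation")],
    remove={"PE-3"},  # e.g. assumed to be inherited from a hosting provider
    parameters={"SI-4": {"monitored_events": "prompts, tool calls, model outputs"}},
    guidance={"AC-6": "Scope model credentials to the minimum data sources needed."},
)
tailored = apply_overlay(baseline, overlay)
print(sorted(tailored))  # ['AC-6', 'SI-10', 'SI-4']
```

Returning a new dictionary rather than editing the baseline in place means the same baseline can be reused with several overlays, which mirrors the idea of combining overlays per use case.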

How COSAiS Fits Into NIST’s Broader AI & Cybersecurity Portfolio

COSAiS is not starting from a blank slate. It is an AI control framework designed to be an implementation-focused companion to other NIST efforts, including the:

- AI Risk Management Framework (AI RMF) – Delivers a high-level approach for establishing and maintaining trustworthiness in AI systems across the lifecycle.

- In-Development Cyber AI Profile – Will describe how organizations can adapt cybersecurity practices in light of AI — both in terms of using AI for cyber defense and protecting enterprises from AI-enabled threats.

- AI 100-2 E2025 – Adversarial Machine Learning – Defines AI attack and mitigation terminologies and taxonomies that inform which SP 800-53 controls should be prioritized for AI systems.

- SP 800-218A – Secure Software Development Practices for Generative AI and Dual-Use Foundation Models – Identifies critical model artifacts and secure development practices for AI developers.

- Draft AI 800-1 – Managing Misuse Risk for Dual-Use Foundation Models – Provides practices to manage risks of criminal misuse of advanced AI models.

COSAiS overlays will draw on these publications to map specific AI threats and misuse scenarios to concrete security controls.


NIST COSAiS: 5 Initial Use Cases for AI Security Overlays

The COSAiS concept paper proposes an initial library of overlays organized around five common use cases. Each overlay is intended to be used individually or in combination, depending on how an organization adopts AI.

1. Adapting & Using Generative AI – LLM Assistants

Audience: Organizations using generative AI to create new content (text, images, audio, video) for internal business augmentation, such as summarizing documents or analyzing data.

Scenarios covered:

- On-premises hosted LLMs with retrieval-augmented generation (RAG) over:

  - Proprietary local data.

  - Mixed local and web data.

  - Web-only data.

- Third-party hosted LLMs with similar data patterns.

Security focus: Mitigating threats like prompt injection, data leakage via prompts and outputs, data poisoning in RAG sources, and compromise of connected resources — while protecting the confidentiality, integrity, and availability of both the model and underlying data.

2. Using & Fine-Tuning Predictive AI

Audience: Organizations using predictive AI and machine learning for automated or semi-automated decision-making, such as recommendation engines, classification services, or workflow automation.

Example workflows include:

- Automating a business process using a third-party hosted model with proprietary input data.

- Using a third-party hosted model with public-facing input data to identify potential malicious activity.

- Running an on-premises model with proprietary data for credit underwriting.

- Using an on-premises model with public-facing input data to triage resumes for hiring.

Each scenario is examined across the predictive AI lifecycle:

- Model training

- Model deployment

- Model maintenance and updates

The overlays will highlight where AI-specific risks arise — such as training data manipulation, model drift, and misuse of automated decisions — and point to the SP 800-53 controls that can manage those risks.

3. Using AI Agent Systems – Single Agent

Audience: Organizations deploying AI agents to automate business tasks and workflows with limited human supervision.

Example scenarios:

- Enterprise Copilot: Connected to a user’s enterprise context (emails, files, calendars, CRM, IT systems), able to take actions like creating calendar events or initiating IT requests, often via standardized protocols like the Model Context Protocol (MCP).

- Coding Assistant: An agent that understands the enterprise codebase and can:

  - Access and edit source repositories

  - Create and commit pull requests

  - Write and run tests

  - Browse proprietary and web resources

  - Assist with deployment steps

These agents blend LLM capabilities with action-taking permissions and deep access to sensitive systems. Overlays will focus on fine-grained access control, separation of duties, change management, monitoring, and rollback mechanisms tailored to agent behavior.
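To make fine-grained access control and separation of duties concrete for an agent, one option is a deny-by-default check that runs before every tool call. The sketch below is an assumption-laden illustration, not something specified by COSAiS: the agent names, action names, `ALLOWED_ACTIONS`, `REQUIRES_HUMAN_APPROVAL`, and `authorize` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy: the actions each agent may take (least privilege).
ALLOWED_ACTIONS = {
    "coding-assistant": {"read_repo", "run_tests", "open_pull_request"},
    "enterprise-copilot": {"read_calendar", "create_calendar_event", "open_it_request"},
}
# Hypothetical policy: actions that need sign-off from someone other than the agent.
REQUIRES_HUMAN_APPROVAL = {"open_pull_request", "open_it_request"}


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: str


def authorize(agent: str, action: str, approved_by: Optional[str] = None) -> Decision:
    """Deny by default; allow listed actions, gated by human approval where required."""
    if action not in ALLOWED_ACTIONS.get(agent, set()):
        return Decision(False, f"{action} is not on the allowlist for {agent}")
    if action in REQUIRES_HUMAN_APPROVAL:
        if approved_by is None:
            return Decision(False, f"{action} requires human approval")
        if approved_by == agent:
            return Decision(False, "an agent cannot approve its own action")
    return Decision(True, "permitted by policy")


print(authorize("coding-assistant", "run_tests").allowed)                    # True
print(authorize("coding-assistant", "deploy_to_production").allowed)         # False
print(authorize("coding-assistant", "open_pull_request").allowed)            # False
print(authorize("coding-assistant", "open_pull_request", "alice").allowed)   # True
```

In practice each `Decision` would also be written to an audit log so that monitoring and rollback have a record of what the agent attempted and why it was allowed or denied.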

4. Using AI Agent Systems – Multi-Agent

Audience: Organizations looking to orchestrate multiple AI agents working together on complex workflows.

Example scenario: Robotic Process Automation (RPA) for expense reimbursement, where multiple agents:

- Extract data from receipts and forms

- Validate claims against company policies (often using RAG)

- Route approved claims for payment

Multi-agent systems introduce additional risks around coordination, emergent behavior, and cascading failures. Overlays for this use case will highlight controls to manage inter-agent communication, enforce guardrails, and monitor the overall system for unexpected behavior.
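One way to picture guardrails on inter-agent communication is a check at every hand-off, so that a bad output from one agent stops the workflow instead of cascading downstream. The following sketch uses the expense reimbursement scenario with stand-in agent functions; the `Claim` fields, `POLICY_LIMIT`, the category list, and all function names are hypothetical.

```python
from dataclasses import dataclass

POLICY_LIMIT = 500.00                               # hypothetical per-claim limit
ALLOWED_CATEGORIES = {"travel", "meals", "supplies"}  # hypothetical policy categories


@dataclass
class Claim:
    employee: str
    amount: float
    category: str


class GuardrailViolation(Exception):
    """Raised when a message passed between agents fails a check."""


def check_handoff(stage: str, claim: Claim) -> Claim:
    """Validate an agent's output before the next agent is allowed to act on it."""
    if not claim.employee:
        raise GuardrailViolation(f"{stage}: missing employee")
    if not (0 < claim.amount <= POLICY_LIMIT):
        raise GuardrailViolation(f"{stage}: amount {claim.amount} outside policy")
    if claim.category not in ALLOWED_CATEGORIES:
        raise GuardrailViolation(f"{stage}: unknown category {claim.category!r}")
    return claim


# Stand-ins for the three agents; real agents would call models and tools.
def extract_agent(receipt: dict) -> Claim:
    return Claim(receipt["employee"], float(receipt["amount"]), receipt["category"])


def validate_agent(claim: Claim) -> Claim:
    return claim


def route_agent(claim: Claim) -> Claim:
    return claim


AGENTS = [("extract", extract_agent), ("validate", validate_agent), ("route", route_agent)]


def run_pipeline(receipt: dict):
    """Run agents in order; halt at the first violation and escalate to a human."""
    log, message = [], receipt
    for name, agent in AGENTS:
        try:
            message = check_handoff(name, agent(message))
        except GuardrailViolation as exc:
            log.append(f"halted: {exc}")
            return "human_review", log
        log.append(f"{name}: ok")
    return "routed_for_payment", log


ok = run_pipeline({"employee": "a.lee", "amount": "120.50", "category": "meals"})
bad = run_pipeline({"employee": "a.lee", "amount": "12050", "category": "meals"})
print(ok[0], bad[0])  # routed_for_payment human_review
```

The per-stage log doubles as a simple system-level monitoring record: it shows which agent's output triggered the halt, which is the information needed to investigate unexpected behavior.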

5. Security Controls for AI Developers

Audience: AI developers, especially those building or fine-tuning generative and dual-use foundation models.

This overlay will map SP 800-53 controls to:

- SP 800-218A – Secure Software Development Practices for Generative AI and Dual-Use Foundation Models

- Draft AI 800-1 – Managing Misuse Risk for Dual-Use Foundation Models

The goal is to help AI developers systematically protect critical model artifacts — such as training data, evaluation datasets, system prompts, weights, and deployment configurations — using familiar control language that is auditable.
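As an illustration of what protecting model artifacts can look like at the implementation level, the sketch below records SHA-256 digests for every file in an artifact directory and later reports anything missing, modified, or unexpected. This is one possible integrity check, not a procedure taken from SP 800-218A or the concept paper; the function names and file names are hypothetical, and the demo uses a temporary directory in place of real weights.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large weight files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(artifact_dir: Path) -> dict:
    """Map each file's relative path to its SHA-256 digest."""
    return {
        p.relative_to(artifact_dir).as_posix(): sha256_of(p)
        for p in sorted(artifact_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(artifact_dir: Path, manifest: dict) -> list:
    """Return a list of findings; an empty list means every artifact matches."""
    current = build_manifest(artifact_dir)
    findings = []
    for name, expected in manifest.items():
        if name not in current:
            findings.append(f"missing: {name}")
        elif current[name] != expected:
            findings.append(f"modified: {name}")
    findings += [f"unexpected: {name}" for name in current if name not in manifest]
    return findings


# Demo with throwaway files standing in for real artifacts.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "weights.bin").write_bytes(b"\x00\x01\x02")
    (root / "system_prompt.txt").write_text("You are a helpful assistant.")
    manifest = build_manifest(root)
    clean = verify_manifest(root, manifest)

    (root / "system_prompt.txt").write_text("Ignore all prior rules.")
    (root / "extra.bin").write_bytes(b"\xff")
    tampered = verify_manifest(root, manifest)

print(clean)     # []
print(tampered)  # ['modified: system_prompt.txt', 'unexpected: extra.bin']
```

A check like this is only as trustworthy as the manifest, so the manifest would need to be stored and access-controlled separately from the artifacts it describes.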


How to Apply NIST’s COSAiS Methodology

The COSAiS project follows a straightforward, repeatable methodology:

1. Identify the AI Use Case

Clarify what type of AI system is in scope (e.g., GenAI assistant, predictive model for hiring, single-agent copilot), including assumptions about hosting, data sources, and users.

2. Conduct an AI Cybersecurity Risk Assessment

Using the concept paper and NIST’s AI 100-2 E2025 – Adversarial Machine Learning taxonomy, identify AI-specific risks for that use case. Examples may include:

- Data or model poisoning

- Prompt injection or indirect prompt injection

- Model extraction or membership inference

- Backdoor attacks

- Data leaks through user interactions

- Compromise of connected systems

3. Identify & Tailor SP 800-53 Controls

Map risks to relevant controls and tailor them to the AI context. For example, you might consider tailoring controls like the following to manage model poisoning risk:

- AC-6, Least Privilege

- CM-2, Baseline Configuration

- CM-3, Configuration Change Control

- SC-28, Protection of Information at Rest

- SC-32, System Partitioning

- SI-3, Malicious Code Protection

- SI-4, System Monitoring

- SI-7, Software, Firmware, and Information Integrity

- SI-7(1), Software, Firmware, and Information Integrity | Integrity Checks

- SI-10, Information Input Validation

- SR-3, Supply Chain Controls and Processes

Tailoring may include scoping, using compensating controls, specifying parameter choices, and incorporating additional implementation details.
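The mapping and tailoring step can be captured as structured data so that it is reviewable and reusable. In the sketch below, the control IDs for model poisoning come from the list above; everything else is an assumption for illustration, including the `TailoredControl` fields, the `tailor` function, and the specific scoping, parameter, and compensating-control decisions.

```python
from dataclasses import dataclass, field

# Candidate controls for model poisoning, taken from the list above.
RISK_TO_CONTROLS = {
    "model_poisoning": [
        "AC-6", "CM-2", "CM-3", "SC-28", "SC-32", "SI-3",
        "SI-4", "SI-7", "SI-7(1)", "SI-10", "SR-3",
    ],
}


@dataclass
class TailoredControl:
    """One control plus the tailoring decisions recorded for it."""
    control_id: str
    in_scope: bool = True                           # scoping decision
    parameters: dict = field(default_factory=dict)  # parameter choices
    implementation_notes: str = ""                  # added implementation detail
    compensating_control: str = ""                  # used when scoped out


def tailor(risk: str, decisions: dict) -> list:
    """Build a tailored entry for every candidate control mapped to a risk."""
    return [
        TailoredControl(control_id=cid, **decisions.get(cid, {}))
        for cid in RISK_TO_CONTROLS[risk]
    ]


# Hypothetical decisions for one system; not recommendations.
decisions = {
    "SI-7(1)": {
        "parameters": {"check_frequency": "before every model load"},
        "implementation_notes": "Verify digests of model weights and training data.",
    },
    "SI-3": {"in_scope": False, "compensating_control": "SI-7"},
}

plan = tailor("model_poisoning", decisions)
print(len(plan))                                         # 11
print([c.control_id for c in plan if not c.in_scope])    # ['SI-3']
```

Controls without an explicit decision keep their defaults, which makes untailored entries easy to spot during review.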

4. Perform a Gap Analysis

Compare the AI-specific control set to controls already in place at the organizational and system level. Ask questions like:

- Where are existing controls already mitigating AI risks?

- Where do AI use cases introduce new security requirements or tighter control needs?

- Which components (model, data pipelines, connectors, agents, infrastructure) remain under-protected?

The outcome should be an overlay that can be applied to similar systems across an enterprise or shared across a community of interest.
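At its simplest, the gap analysis is a set comparison between the controls the overlay calls for and the controls already in place for each component. The sketch below assumes a hypothetical inventory keyed by component; the control selections and the `gap_analysis` function are illustrative, not part of the COSAiS methodology text.

```python
def gap_analysis(overlay_controls: set, implemented: dict) -> dict:
    """Compare required controls with those in place for each component.

    `implemented` maps a component name to the set of control IDs in place for it.
    """
    return {
        component: {
            "covered": sorted(overlay_controls & in_place),
            "missing": sorted(overlay_controls - in_place),
        }
        for component, in_place in implemented.items()
    }


# Hypothetical overlay subset and current-state inventory.
overlay_controls = {"AC-6", "CM-3", "SI-4", "SI-7", "SI-10"}
implemented = {
    "model": {"AC-6", "CM-3"},
    "data_pipeline": {"AC-6", "CM-3", "SI-4", "SI-7", "SI-10"},
    "agents": {"AC-6"},
}

report = gap_analysis(overlay_controls, implemented)

# Rank components by how many required controls they lack.
ranked = sorted(report, key=lambda name: len(report[name]["missing"]), reverse=True)

print(report["model"]["missing"])  # ['SI-10', 'SI-4', 'SI-7']
print(ranked)                      # ['agents', 'model', 'data_pipeline']
```

The "covered" lists answer where existing controls already mitigate AI risks, and the ranking points to the components that remain under-protected.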


Next Steps: Turning Familiar Controls Into AI Security Action

AI is changing quickly, but the core principles of cybersecurity — protecting confidentiality, integrity, and availability — remain constant. COSAiS is about translating those principles into concrete, reusable control overlays that:

- Focus on real AI use cases.

- Build on SP 800-53 controls and existing enterprise processes.

- Draw from NIST’s broader portfolio on AI risk, misuse, and adversarial threats.

- Help organizations implement and assess AI security in a consistent, interoperable way.

NIST is actively seeking COSAiS project feedback and discussion via email or its dedicated Slack channel. Based on community response, NIST plans to begin with one of the use cases and aims to release a public draft overlay in early FY26, followed by a public workshop during the comment period.

For information security and risk officers, AI developers, and compliance leaders, this is an opportunity to shape practical, standards-based guidance that will influence how AI systems are secured across all enterprises and sectors.

In the meantime, if your organization is deploying or developing AI systems — or planning to — now is the moment to reach out to the AI and cybersecurity experts at Pellera Technologies for help securing your systems.